Coding and testing when you know what you want the computer to do

Reminder of Intro and Debugging 1 & 2: AI is BS, but not useless. You can prompt it with an error message and get suggestions, or you can give it an example of code that is not doing what you think it should do. Tell it who you want it to be, and keep asking questions if necessary.

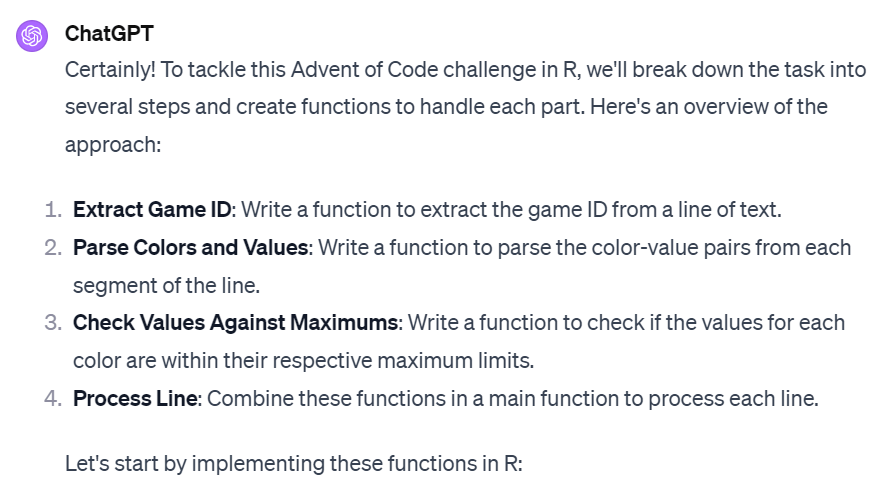

Next idea: If you have not written the code yet but you know what you want it to do, you can ask a chatbot to write it for you.

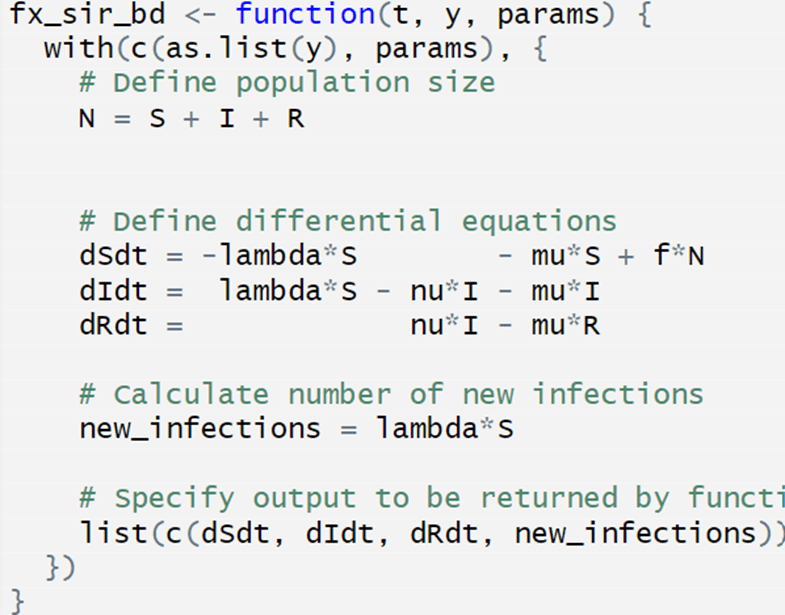

This works particularly well with functions. For example, to get a function for the SEIR model in a closed cohort, start your prompt with:

fx_seir <- function(t, y, params) {

You are responsible for what comes out. That means you must test it. Link to an example to explore.
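As a rough illustration of the kind of function a chatbot might return from that starting line, here is a minimal sketch. It assumes the derivative-function convention used by ODE solvers in the deSolve style (take `t`, `y`, and `params`; return a list holding the derivatives) and frequency-dependent transmission. The parameter names `beta`, `sigma`, and `gamma` are placeholders chosen for this sketch, not names taken from the course materials.

```r
# Sketch of an SEIR derivative function for a closed cohort (no births or deaths).
# Assumed (hypothetical) names: y has elements S, E, I, R;
# params has beta (transmission rate), sigma (1 / latent period),
# and gamma (1 / infectious period).
fx_seir <- function(t, y, params) {
  S <- y[["S"]]; E <- y[["E"]]; I <- y[["I"]]; R <- y[["R"]]
  N <- S + E + I + R

  beta  <- params[["beta"]]
  sigma <- params[["sigma"]]
  gamma <- params[["gamma"]]

  new_infections <- beta * S * I / N   # flow from S to E
  dS <- -new_infections
  dE <-  new_infections - sigma * E    # E moves to I at rate sigma
  dI <-  sigma * E - gamma * I         # I moves to R at rate gamma
  dR <-  gamma * I

  # Solvers in the deSolve style expect a list whose first element
  # is the vector of derivatives, in the same order as y.
  list(c(dS = dS, dE = dE, dI = dI, dR = dR))
}

# Quick check at a single point in time
y0     <- c(S = 990, E = 0, I = 10, R = 0)
params <- c(beta = 0.5, sigma = 0.2, gamma = 0.1)
deriv  <- fx_seir(0, y0, params)[[1]]
print(deriv)
```

Because the cohort is closed, the four derivatives should add up to zero. That is one of the first things worth checking in whatever the chatbot hands back.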

Don’t know just how to test? A chatbot can help with that, too. For example, prompt it with: “How can I test fx_seir to make sure it is doing what I want?”

You can write the tests first (“test-driven development” is the name for this in software engineering); this turns the process of writing code into a game (and if you like this game, you might be a software engineer!)
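A minimal sketch of the tests-first idea in base R follows. The checks are written against the function signature only, so they can be run on any implementation, whether you wrote it or a chatbot did. The parameter names `beta`, `sigma`, and `gamma`, the helper `test_seir`, and the `candidate` implementation are all assumptions made for this illustration.

```r
# Tests written before (and independently of) any implementation.
# `f` is any candidate with the signature f(t, y, params) that returns
# a list whose first element is c(dS, dE, dI, dR). The parameter names
# beta, sigma, gamma are assumptions made for this sketch.
test_seir <- function(f) {
  params <- c(beta = 0.5, sigma = 0.2, gamma = 0.1)

  # 1. Closed cohort: the derivatives must sum to zero.
  d <- f(0, c(S = 990, E = 5, I = 5, R = 0), params)[[1]]
  stopifnot(abs(sum(d)) < 1e-9)

  # 2. No exposed or infectious people: nothing should change.
  d <- f(0, c(S = 1000, E = 0, I = 0, R = 0), params)[[1]]
  stopifnot(all(abs(d) < 1e-9))

  # 3. With infectious people present, S falls and R rises.
  d <- f(0, c(S = 990, E = 0, I = 10, R = 0), params)[[1]]
  stopifnot(d[1] < 0, d[4] > 0)

  invisible(TRUE)
}

# A deliberately compact candidate implementation to run the tests against.
candidate <- function(t, y, params) {
  with(as.list(c(y, params)), {
    N <- S + E + I + R
    list(c(-beta * S * I / N,
           beta * S * I / N - sigma * E,
           sigma * E - gamma * I,
           gamma * I))
  })
}

result <- test_seir(candidate)
print(result)
```

If a candidate fails any check, `stopifnot` stops with an error naming the failed condition, which is itself a useful thing to paste back into the chatbot.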

Lauren Wilner (Epi 560 TA) says: Try to use AI to help you build on things you already know. For example, if you are told to write a loop but you already know how to do what you want by copying and pasting 10 times, write out the code you would write (10 lines of copying, pasting, and changing little things) and then ask ChatGPT for advice on putting it into a loop. That way, you can run both what you wrote and what ChatGPT gave you, and compare the results.
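Here is a small sketch of that workflow in base R. The case counts and population sizes are made-up numbers for illustration only. The copy-and-paste version comes first, then the loop version, then a comparison of the two.

```r
# Hypothetical data: yearly case counts and population sizes.
cases      <- c(12, 15, 9, 20, 17)
population <- c(1000, 1100, 1050, 1200, 1150)

# Version 1: what you already know how to write (copy, paste, edit).
rate_1 <- cases[1] / population[1] * 1000
rate_2 <- cases[2] / population[2] * 1000
rate_3 <- cases[3] / population[3] * 1000
rate_4 <- cases[4] / population[4] * 1000
rate_5 <- cases[5] / population[5] * 1000
rates_by_hand <- c(rate_1, rate_2, rate_3, rate_4, rate_5)

# Version 2: the same calculation as a loop (the part you might ask for help with).
rates_loop <- numeric(length(cases))
for (i in seq_along(cases)) {
  rates_loop[i] <- cases[i] / population[i] * 1000
}

# Do the two versions agree?
same_answer <- isTRUE(all.equal(rates_by_hand, rates_loop))
print(same_answer)
```

If the two versions disagree, you know where to look, because you already understand every line of the first version.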

Lauren Wilner (Epi 560 TA) says: For testing code, I have found that ChatGPT is very good at simulating data. I will often simulate my own data, write a function, and at the point I think that I’m done, I will give my function to ChatGPT and tell it to simulate data with whatever parameters I am using and see if it is able to replicate my work. This isn’t relevant for our students necessarily, but I do think it is very helpful; it acts sort of like a second pair of eyes.
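The simulate-and-check idea can be sketched as follows. The function `risk_ratio`, the sample size, and the true parameter values are all hypothetical choices for this example. The point is only that data simulated with a known answer lets you see whether a function recovers it.

```r
# Function under test: risk ratio from binary exposure and outcome vectors.
risk_ratio <- function(exposed, outcome) {
  risk_exposed   <- mean(outcome[exposed == 1])
  risk_unexposed <- mean(outcome[exposed == 0])
  risk_exposed / risk_unexposed
}

# Simulate data where the true answer is known in advance.
set.seed(560)
n                   <- 200000
true_risk_unexposed <- 0.10
true_rr             <- 2

exposed <- rbinom(n, size = 1, prob = 0.5)
outcome <- rbinom(n, size = 1,
                  prob = ifelse(exposed == 1,
                                true_risk_unexposed * true_rr,
                                true_risk_unexposed))

# The estimate should land close to the true value, up to sampling noise.
estimate <- risk_ratio(exposed, outcome)
print(c(truth = true_rr, estimate = estimate))
```

With a sample this large, an estimate far from the truth would suggest a bug in the function or in the simulation, and either one is worth tracking down.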

Understanding, explaining, and documenting code (aka “what is this doing?”)

Reminder of Intro and Debugging 1 & 2: AI is BS, but not useless. You can prompt it with an error message and get suggestions, or you can give it an example of code that is not doing what you think it should do. Tell it who you want it to be, and keep asking questions if necessary.

Final idea: How to ask an AI to explain code. You can use this to help yourself understand code in the assignments, and you can even use it to document your code to help others, including your future self, understand your code.

For example, “Explain this code:” followed by the code itself:

    # Call lsoda function with initial conditions, times, SIR function, and parameters defined above.
    output <- as.data.frame(lsoda(y = init, times = t, func = fx_sir_bd, parms = params))

Lauren Wilner (Epi 560 TA) says: Start your prompt according to the first precept (tell it who you want it to be), e.g. “Can you please pretend to be my stats professor and explain to me what the code below is doing and what the expected output is and what this code would be used for? Are there things you would change or improve?”

Keep asking questions if necessary!

Lauren Wilner (Epi 560 TA) says: If I inherit code, I generally ask ChatGPT to comment each line or function or code chunk with what it is doing and have found that very helpful.

You can also ask it to document or improve documentation in code you have written (or received).

For example, “Please improve the documentation in this code:” followed by the code:

    # Melt dataset for easy plotting
    output_melted <- melt(data = output, id.vars = "time",
                          measure.vars = c("S", "I", "R"))

    # Plot output - plot (1)
    ggplot(data = output_melted) +
      geom_line(aes(x = time, y = value, col = variable)) +
      scale_color_manual(breaks = c("S", "I", "R"),
                         labels = c("Susceptible", "Infected", "Recovered"),
                         values = c("green", "red", "purple")) +
      labs(x = "Time", y = "Number of individuals", col = "State") +
      theme(panel.background = element_blank(),
            panel.grid = element_blank(),
            panel.border = element_rect(color = "black", fill = NA),
            axis.title = element_text(family = "Times"),
            axis.text = element_text(family = "Times"),
            legend.key = element_blank(),
            legend.text = element_text(family = "Times"),
            legend.title = element_text(family = "Times"))

Lauren Wilner (Epi 560 TA) says: an additional prompt for this might be: “Can you please pretend to be my mentor and explain to me what the code below is doing and what the expected output is and what this code would be used for? Are there things you would change or improve?”

Lauren Wilner (Epi 560 TA) says: I love giving ChatGPT code that I have finished and asking it to tell me what my code is doing. This both helps me with documentation and ensures that the code I wrote is doing what I think it is doing. If ChatGPT tells me something surprising about what my code is doing, I generally start a conversation saying “I thought I was doing X, can you explain to me why you think I am doing Y? Am I also doing X?” or something like that.

Final thought: Remember that you are responsible for what comes out. So don’t stop with what you get… read and edit it to improve. What might you improve in this result? https://chat.openai.com/share/5f118cc6-71f6-4dcb-b906-0a940f1eca16