Last June, I happened to attend an ACM Tech Talk about LLMs in Intro Programming, which left me very optimistic about the prospects of AI-assisted programming for my Introduction to Epidemic Modeling course.

I read the book that the tech talk speakers were writing and decided that it was not really what my epi students needed. But it left me hopeful that someone is working on that book, too.

In case no one writes it soon, I’ve also been trying to teach myself how to use AI to do disease modeling and data science tasks. I just wrapped up my disease modeling course for the quarter, though, and I did not figure it out in time to teach my students anything useful.

In my copious spare time since I finished lecturing, I’ve been using ChatGPT to solve Advent of Code challenges, and it has been a good education. I have a mental model of the output of a language model as the Platonic ideal of Bullshit (in the philosophical sense), and using it to solve carefully crafted coding challenges is a bit like trying to get an eager high school intern to help with my research.

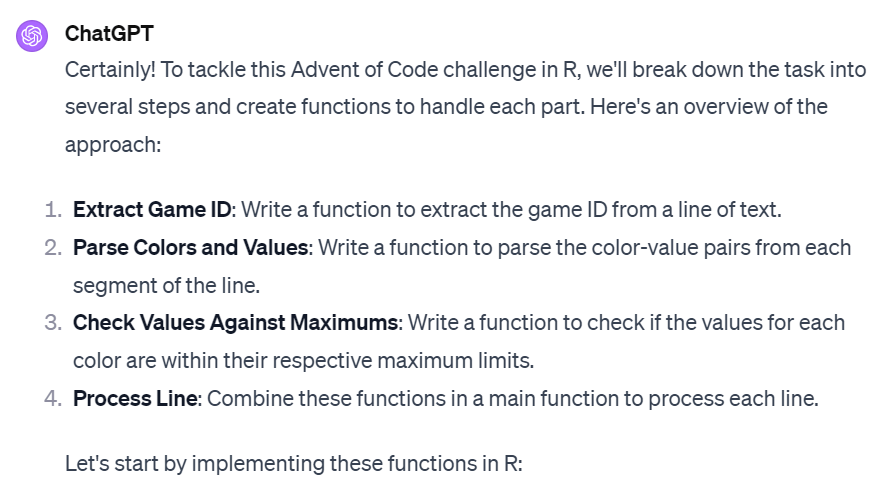

Here is an example chat from my effort to solve the challenge from Day 2, which is pretty typical for how things have gone for me:

The text it generates is easy to read and well formatted. Unfortunately, it includes code that usually doesn’t work:

It might not work, it might be BS (in the philosophical sense), but it might still be useful! I left Zingaro and Porter’s talk convinced that AI-assisted programmers are going to need to build super skills in testing and debugging, and this last week of self-study has reinforced my belief.

As luck would have it, I was able to attend another (somewhat) relevant ACM Talk this week, titled “Unpredictable Black Boxes are Terrible Interfaces”. It was not as optimistic as the intro programming one, but it did get me thinking about how useful dialog is when working with eager interns. It is very important that humans feel comfortable saying they don’t understand and asking clarifying questions. I have trouble getting interns to contribute to my research when they are afraid to ask questions. If I understand correctly, Agrawala’s preferred interface for Generative AIs would be a system that asked clarifying questions before generating an image from his prompt. It turns out that I have seen a recipe for that:

I am going to try the next week of AoC with this Flipped Interaction Pattern. Here is my prompt, which is a work in progress, and here is my GPT, if you want to give it a try, too.
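For anyone who has not seen the pattern, here is a rough sketch of what a Flipped Interaction prompt can look like when built in Python. It is an illustration only, not the prompt linked above: the function name `flipped_interaction_prompt`, its parameters, and the exact wording are all assumptions made for this example.

```python
def flipped_interaction_prompt(goal: str, stop_condition: str,
                               questions_per_turn: int = 1) -> str:
    """Return a prompt that asks the model to question the user before answering.

    Hypothetical helper for illustration; the wording is an assumption,
    not the prompt used in this post.
    """
    if questions_per_turn < 1:
        raise ValueError("questions_per_turn must be at least 1")
    noun = "question" if questions_per_turn == 1 else "questions"
    return (
        f"I would like you to ask me questions to achieve this goal: {goal}\n"
        f"Ask at most {questions_per_turn} {noun} per turn and wait for my answer.\n"
        f"Keep asking until {stop_condition}.\n"
        "Do not write any code until then."
    )


if __name__ == "__main__":
    prompt = flipped_interaction_prompt(
        goal="write a Python solution to a coding puzzle that I will paste in",
        stop_condition="you can restate the puzzle rules and I confirm them",
    )
    # The prompt would typically go in as the first (system) message of a chat.
    messages = [{"role": "system", "content": prompt}]
    print(prompt)
```

The stop condition matters most here: without one, the model may either never stop asking or jump straight to generating code.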